For further information see Parquet Files. The Parquet-format project contains all Thrift definitions that are necessary to create readers and writers for Parquet files.

Subsituted null for ip_address for some records to setup data for filtering.

. Output_date odbget_data dataset_path date-parquet access_keyaccess_key Get the Prescribing Chemical data file. Spark - Parquet files. In a future release youll be able to point your R session at S3 and query the dataset from there.

Big parquet file sample. The block size is the size of MFS HDFS or the file system. Kylo is licensed under Apache 20.

Apache parquet file sample. Contributed by Teradata Inc. Sample snappy parquet file.

Basic file formats - such as CSV JSON or other text formats - can be useful when exchanging data between applications. Removed registration_dttm field because of its type INT96 being incompatible with Avro. - kylouserdata1parquet at master Teradatakylo.

Sample Parquet data file citiesparquet. All files are safe from viruses and adults-only content. Click here to download.

The larger the block size the more memory Drill needs for buffering data. Open an Explorer window and enter TEMP in the address bar. We care for our content.

This repository hosts sample parquet files from here. If you need example dummy files for testing or demo and presentation purpose this is a great place for you. The easiest way to see to the content of your PARQUET file is to provide file URL to OPENROWSET function and specify parquet FORMAT.

All files are free to download and use. How to work with Parquet files using native Python and PySpark. See the following Apache Spark reference articles for supported read and write options.

If clicking the link does not download the file right-click the link and save the linkfile to your local file system. Parquet files that contain a single block maximize the amount of data Drill stores contiguously on disk. Apache parquet sample file download.

Python srcpyspark_csv_to_parquetpy CSV Parquet with Koalas. When the conversion of parquet tables of Hive metatement is enabled the. The New York City taxi trip record data is widely used in big data exercises and competitions.

This is what will be used in the examples. This is not split into seperate areas 275 GB. This will invalidate sparksqlparquetmergeschema.

Python srckoalas_csv_to_parquetpy More info. View the original dataset location and the. This file is less than 10 MB.

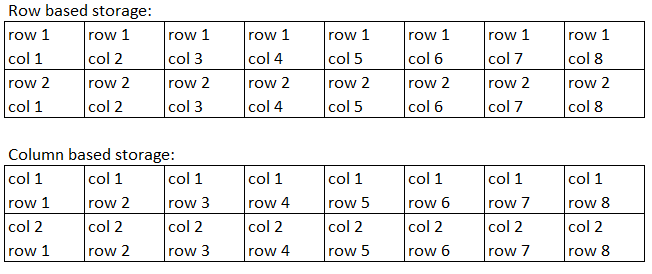

Convert a CSV to Parquet with Pandas. The data was collected and provided to the NYC Taxi and Limousine Commission TLC by technology providers authorized under the Taxicab Livery Passenger Enhancement Programs TPEPLPEP. Parquet is a columnar storage format that supports nested data.

CSV 773 KB. Apache Parquet is a columnar file format that provides optimizations to speed up queries and is a far more efficient file format than CSV or JSON. I have made following changes.

If the file is publicly available or if your Azure AD identity can access this file you should be able to see the content of the file using the query like the one shown in the following example. Test area only area 3. For more details about parquet encryption parameters visit the Parquet-Hadoop configuration page.

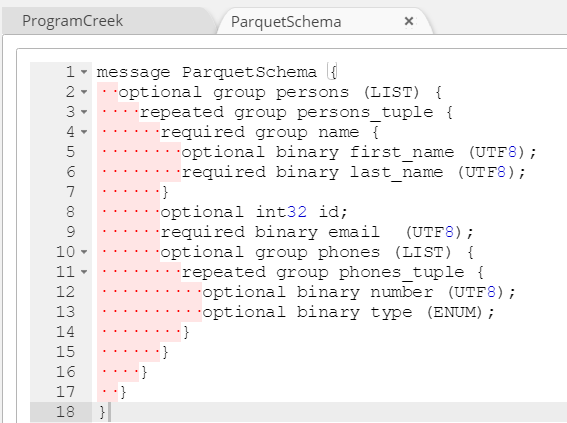

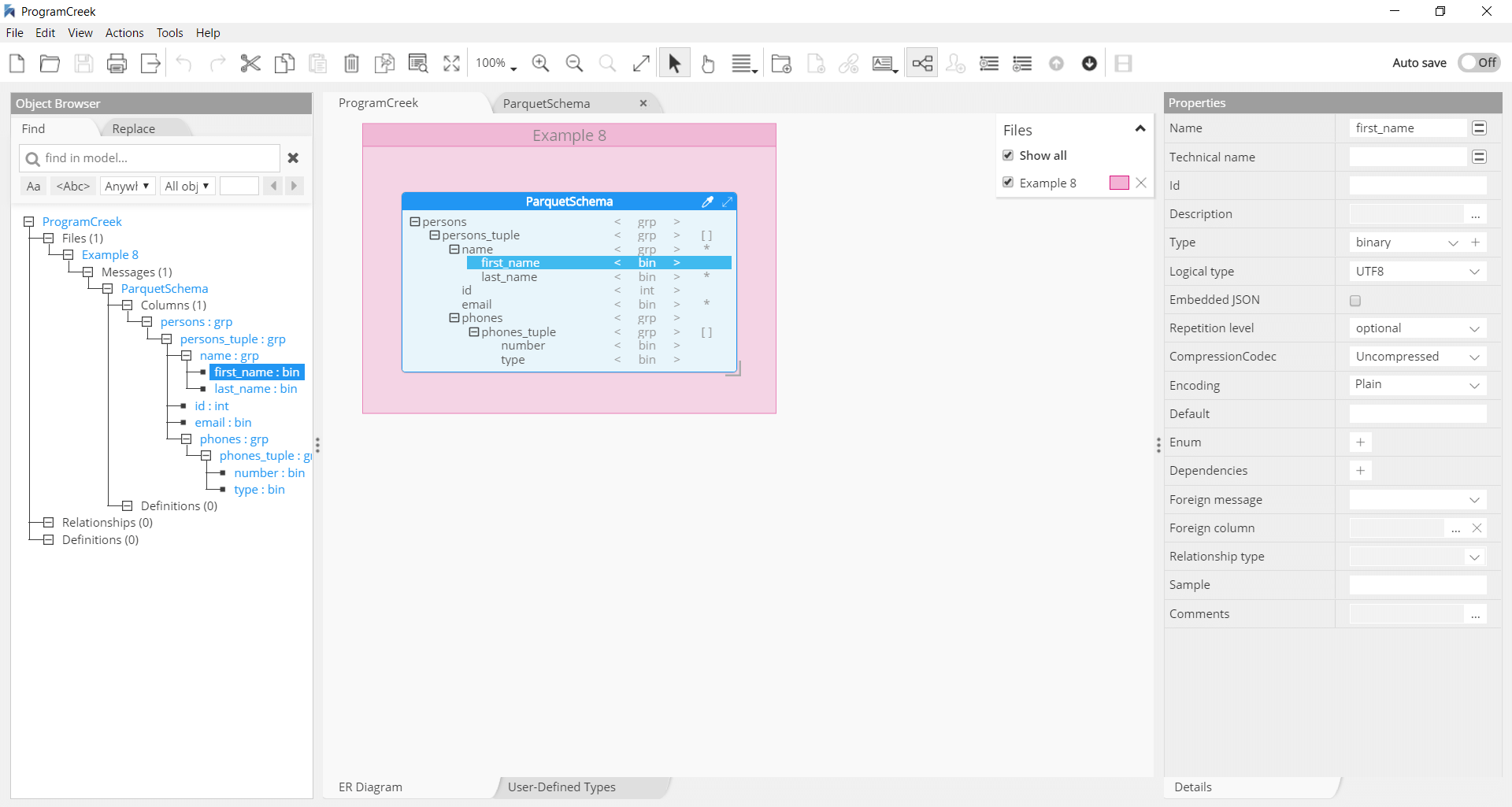

Parquet metadata is encoded using Apache Thrift. Get the Date data file. On the right there are some details about the file such as its size so you can best decide which one will fit your needs.

Download a small sample 19 of the full dataset in parquet format XGB. The files might be useful for testing upload HTML5 videos etc. Kylo is a data lake management software platform and framework for enabling scalable enterprise-class data lakes on big data technologies such as Teradata Apache Spark andor Hadoop.

Python srccsv_to_parquetpy CSV Parquet with PySpark. Below you will find a selection of sample csv document files for you to download. Click here to download.

For information about the format of the files. Configuring the size of Parquet files by setting the storeparquetblock-size can improve write performance. We created Parquet to make the advantages of compressed efficient columnar data representation available to any.

All DPLA data in the DPLA repository is available for download as zipped JSON and parquet files on Amazon Simple Storage Service S3 in the bucket named s3dpla-provider-export. Then copy the file to your temporary folderdirectory. Download the complete SynthCity dataset as a single parquet file.

To get and locally cache the data files the following simple code can be run. In this article. The trip data was not created by the TLC and TLC makes no representations as to the accuracy of these data.

For demonstration purposes we have hosted a Parquet-formatted version of about 10 years of the trip data in a public S3 bucket. When it comes to storing intermediate data between steps of an application Parquet can provide more advanced capabilities. For more details about how to access and download these files from S3 see the S3 documentation.

Support for complex types as opposed to string-based types CSV or a limited.

The Parquet Format And Performance Optimization Opportunities Boudewijn Braams Databricks Youtube

Convert Csv To Parquet File Using Python Stack Overflow

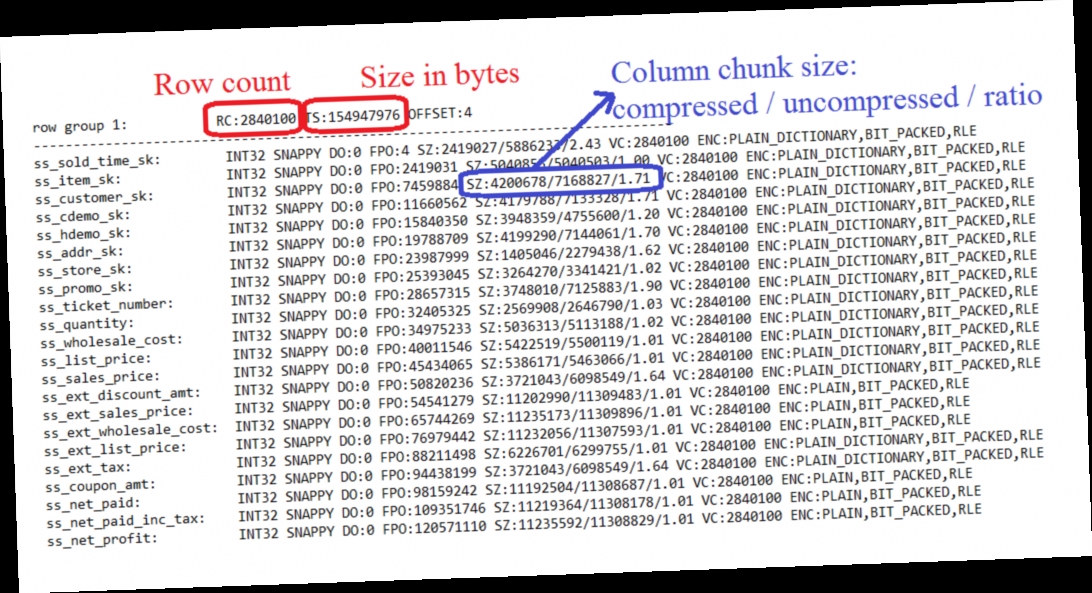

Miscellaneous Tools Parquet Diagnostics Md At Master Lucacanali Miscellaneous Github

Parquet Data File To Download Sample Twitter

How To Generate Nested Parquet File Format Support

Chris Webb S Bi Blog Parquet File Performance In Power Bi Power Query Chris Webb S Bi Blog

0 comments

Post a Comment